This article distills the key insights and examples from the live session and expands them into a structured, practical guide to cloud BI migration and modernization.

Moving BI to the cloud is the right call, as long as the migration is also modernization. Treat it like a hosting change and you get lift-and-shift. Treat it like a redesign and you gain speed, predictable cost, trust, and AI readiness.

In a candid discussion, Astrato’s Martin Mahler and Propelling Tech’s Alejandro Martinez argue that rehosting is not modernizing. The design choices you make during migration determine whether you truly modernize, or simply replatform problems at greater scale and cost.

“Performance equals cost in the cloud. The longer it runs, the more you pay.” - Martin Mahler

A pharma team learned this the hard way. They moved to cloud BI expecting instant improvements, but performance got worse. On-prem shortcuts – extracts, workbook logic, models tuned for RAM – were lifted into a live, elastic environment where every slow join and extra byte shows up on the bill.

This piece walks through the six common pitfalls the speakers see in migrations and the modernization playbook that beats them.

1) The myth of lift-and-shift

Cloud BI is often sold as a simple move: the same dashboards, just hosted elsewhere. That mindset is the root of many failures.

“Cloud BI is not a lift-and-shift. It’s an architectural change and a different design.” - Martin Mahler

“The model that worked on-prem won’t apply in the cloud, you need to re-imagine.” - Alejandro Martinez

On-prem BI thrived on in-memory tricks, extracts, and workbook-level logic. Cloud-native BI pushes compute down into the warehouse and expects live, governed metrics. Treating the cloud like a new zip code for the same stack backfires: you inherit nightly reloads, brittle pipelines, and workbook sprawl, now with network latency, concurrency pressure, and cross-cloud data movement in the mix.

Martinez offers a blunt analogy:

“Think of strapping a machine to a horse instead of bringing a tractor: you keep the speed of the horse.”

A useful mental model is Snowflake’s original innovation: decoupling storage and compute to unlock elasticity. Cloud BI needs the same rethink. Push heavy logic to the warehouse, stop hoarding extracts, and make the BI layer about interaction, not about being a mini warehouse.

How to solve it

Design for live or incremental data, centralize calculations in a semantic layer, and plan for concurrency with clustering, partitions, and materialized views. Rebuilding some assets is not waste; it is the cost of unlocking elasticity.
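To make "incremental data" concrete, here is a minimal sketch of a high-water-mark load, assuming the source rows carry an `updated_at` timestamp and a primary key. The `Row` type and `incremental_merge` function are hypothetical illustrations, not any tool's API; in a real warehouse the `updated_at > watermark` filter would be pushed into the source query rather than applied in Python.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Row:
    id: int                # primary key used for upserts
    updated_at: datetime   # change timestamp used as the watermark column
    amount: float


def incremental_merge(target: dict[int, Row], source: list[Row], watermark: datetime) -> datetime:
    """Upsert only rows changed since the last watermark and return the new watermark."""
    new_watermark = watermark
    for row in source:
        if row.updated_at > watermark:   # skip rows already loaded in a previous run
            target[row.id] = row          # upsert by primary key instead of a full reload
            new_watermark = max(new_watermark, row.updated_at)
    return new_watermark
```

Each run touches only what changed since the stored watermark, which is the difference between paying for a nightly full reload and paying for the delta.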

2) The cost illusion

Many teams budget for subscription licenses and forget the rest: compute, storage, egress, AI add-ons, and the very real price of inefficient queries.

“Bad reports show up as database consumption. That’s the pain.” - Alejandro Martinez

“Teams are surprised by egress fees and premium capacities… the jump can feel like four times what they paid before.” - Martin Mahler

The cloud’s consumption model is unforgiving. A sluggish dashboard does not just frustrate users; it burns credits. “Cheap to pilot, pricey to scale” is a common pattern when seat licenses pile up and warehouse usage is left ungoverned.

Costs also spike when teams bolt on AI features priced per request or per model before they have fixed query efficiency or adoption. The pattern is predictable: seat creep on the front end, credit burn on the back end, and AI charges layered on top.

How to solve it

Manage by unit economics. Track all-in cost per 1,000 dashboard views (BI plus warehouse), set budgets and alerts, cache or materialize hot paths, and review query plans on high-traffic assets. If you cannot measure the cost to serve, you cannot control it.
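The unit-economics metric is simple arithmetic, sketched below under the assumption that you can attribute a period's BI license cost and BI-driven warehouse spend (compute, storage, egress) and count dashboard views for the same period. `PeriodUsage`, `cost_per_1000_views`, and `over_budget` are hypothetical names for illustration.

```python
from dataclasses import dataclass


@dataclass
class PeriodUsage:
    bi_license_cost: float   # BI subscription cost for the period
    warehouse_cost: float    # compute + storage + egress attributable to BI queries
    dashboard_views: int     # dashboard views in the same period


def cost_per_1000_views(u: PeriodUsage) -> float:
    """All-in cost (BI plus warehouse) per 1,000 dashboard views."""
    if u.dashboard_views <= 0:
        raise ValueError("dashboard_views must be positive")
    return (u.bi_license_cost + u.warehouse_cost) * 1000 / u.dashboard_views


def over_budget(u: PeriodUsage, budget_per_1000: float) -> bool:
    """Alert condition: unit cost above the agreed budget."""
    return cost_per_1000_views(u) > budget_per_1000
```

For example, 5,000 in licenses plus 3,000 in warehouse spend over 40,000 views works out to 200 per 1,000 views; tracking that number per asset shows which dashboards are worth tuning first.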

3) Old problems, new zip code

Migration does not fix metric drift, brittle ETL, or failed reloads; it often amplifies them.

“If you just lift and shift the mess, the cloud amplifies the problems.” - Martin Mahler

“Migrate what’s used. Deduplicate. Use telemetry, query IDs, to see who’s hitting the same dataset.” - Alejandro Martinez

Legacy BI encouraged one-off logic embedded in workbooks and scripts. When that logic is scattered across hundreds of dashboards, every change breaks something. In the cloud, that brittleness is magnified by scale and by the simple fact that more teams now expect access.

How to solve it

Before migrating, audit usage. Use warehouse telemetry (query IDs) to spot duplicate assets, consolidate them into a single, governed dataset, deprecate what is unused, and move only what matters. Then standardize definitions in a semantic layer, adopt CDC or incremental data movement, and wire in tests and lineage.
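One way to use query telemetry for this audit, sketched under the assumption that you can export a log of (dashboard, SQL text) pairs from the warehouse's query history: normalize each query into a fingerprint so near-identical copies group together, then flag inventoried dashboards that issued no queries at all. The function names and the regex-based normalization are hypothetical simplifications; real SQL parsers handle far more cases.

```python
import hashlib
import re
from collections import defaultdict


def fingerprint(sql: str) -> str:
    """Normalize a query so trivially different copies hash to the same value."""
    s = sql.lower()
    s = re.sub(r"'[^']*'", "?", s)           # replace string literals
    s = re.sub(r"\b\d+(\.\d+)?\b", "?", s)   # replace numeric literals
    s = re.sub(r"\s+", " ", s).strip()       # collapse whitespace
    return hashlib.sha256(s.encode()).hexdigest()[:12]


def find_duplicates(query_log: list[tuple[str, str]]) -> dict[str, set[str]]:
    """Map each shared fingerprint to the set of dashboards issuing it."""
    groups: dict[str, set[str]] = defaultdict(set)
    for dashboard, sql in query_log:
        groups[fingerprint(sql)].add(dashboard)
    return {fp: dashes for fp, dashes in groups.items() if len(dashes) > 1}


def find_unused(inventory: list[str], query_log: list[tuple[str, str]]) -> set[str]:
    """Dashboards in the inventory that issued no queries in the log window."""
    return set(inventory) - {dashboard for dashboard, _ in query_log}
```

Duplicate groups are candidates for consolidation into one governed dataset, and unused dashboards are candidates for retirement before anything is migrated.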

4) UX doesn’t improve by itself

End users do not care where servers live. They care whether the experience is fast, guided, and lets them get work done without tab-hopping.

“You just want to be in one app that lets you do the work.” - Alejandro Martinez

The self-service promise stalled because most of the effort lives in data plumbing. When business users are asked to wrangle joins, time intelligence, or security rules, adoption drops and Excel creeps back in. Shifting logic to the warehouse changes that balance: the BI layer can become a product-like app with sub-second paths, writeback, workflows, and role-aware views, not a thin veneer over a tangled pipeline.

Treat each dashboard as a job to be done. If users cannot filter, take action (write back), or complete a workflow in one place, they will bounce to Excel.

How to solve it

Treat BI like a product. Use weekly active users as a share of licensed users, and median load time, as north-star KPIs. If it is not fast and task-centered, it will not be used.
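Both KPIs can be computed from ordinary usage logs. The sketch below assumes a log of (user, date) view events, a licensed-seat count, and a list of dashboard load times in seconds; the function names are hypothetical.

```python
from datetime import date, timedelta
from statistics import median


def weekly_active_ratio(events: list[tuple[str, date]], licensed_users: int, week_start: date) -> float:
    """Share of licensed users who opened at least one dashboard during the week."""
    if licensed_users <= 0:
        raise ValueError("licensed_users must be positive")
    week_end = week_start + timedelta(days=7)
    active = {user for user, day in events if week_start <= day < week_end}
    return len(active) / licensed_users


def median_load_seconds(load_times: list[float]) -> float:
    """Median rather than mean, so a few slow outliers do not hide the typical experience."""
    return median(load_times)
```

A low active ratio with a healthy median load time points at a task-fit problem; a high median load time points at performance first.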


5) Governance in a new control plane

In the cloud, where you enforce access controls (the identity provider plus the data warehouse) matters as much as what the rules are. If you scatter rules across BI apps, you get gaps and inconsistency.

“With every copy of data you lose governance. Fragmented security across tools kills consistency.” - Martin Mahler

“Don’t handle policies in the BI tool, enforce them where the data is.” - Alejandro Martinez

Legacy setups often relied on app-level rules that were easy to bypass.

“In legacy BI, someone could add an asterisk and give full access, it was easy to ‘hack’ without being a hacker.” - Alejandro Martinez

In a cloud posture, you want single sign-on via your identity provider and row- or column-level security enforced in the warehouse. Every extract or shadow copy multiplies the places you must reapply and audit policy.
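In a warehouse, row-level security is declared in SQL as a policy on the table; the Python below only simulates the principle so it can run anywhere. It assumes group claims arrive from the identity provider via SSO and that every consumer (dashboard, chatbot, export) reads through one enforcement point. `User`, `REGION_POLICY`, and `query_orders` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class User:
    name: str
    groups: frozenset[str]   # group claims issued by the identity provider (SSO)


# Hypothetical policy: which IdP group may see which region's rows.
REGION_POLICY: dict[str, set[str]] = {
    "emea_sales": {"EMEA"},
    "amer_sales": {"AMER"},
    "global_admin": {"EMEA", "AMER", "APAC"},
}


def allowed_regions(user: User) -> set[str]:
    """Union of regions granted by the user's groups; unknown groups grant nothing."""
    regions: set[str] = set()
    for group in user.groups:
        regions |= REGION_POLICY.get(group, set())
    return regions


def query_orders(user: User, table: list[dict]) -> list[dict]:
    """Single enforcement point: every consumer reads through this filter."""
    visible = allowed_regions(user)
    return [row for row in table if row["region"] in visible]
```

Because the filter defaults to deny and lives next to the data, a new consumer such as a chatbot inherits the same rules instead of needing its own copy.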

“Use a zero-copy, live-query posture so you inherit security once. Involve InfoSec early and use SSO.” - Martin Mahler

For European buyers, data residency and portability are not abstract. Fewer copies mean fewer cross-border headaches, and enforcing policy where data lives makes GDPR compliance simpler to evidence.

Case in point: one manufacturer rolled out a chatbot over mixed data copies. Suppliers could query sensitive information about competitors: no breach required, just bad policy placement. Enforcing access in-warehouse would have prevented it.

How to solve it

Centralize control. Use SSO via your identity provider, enforce access in the warehouse with row-level or column-level security (RLS or CLS), and go zero-copy to stop policy drift. Measure coverage (the percentage of datasets under policy) and mean time to revoke access.
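Both governance metrics are straightforward to compute once you have an inventory and a revocation log. This sketch assumes a mapping of dataset name to "has a policy attached" and a list of (requested, completed) timestamps for access revocations; both inputs and function names are hypothetical.

```python
from datetime import datetime, timedelta


def policy_coverage(datasets: dict[str, bool]) -> float:
    """Percentage of datasets with an access policy attached."""
    if not datasets:
        return 0.0
    return 100.0 * sum(datasets.values()) / len(datasets)


def mean_time_to_revoke(revocations: list[tuple[datetime, datetime]]) -> timedelta:
    """Average gap between a revocation request and access actually being removed."""
    if not revocations:
        raise ValueError("no revocations recorded")
    total = sum((done - asked for asked, done in revocations), timedelta())
    return total / len(revocations)
```

With a zero-copy posture, revocation happens once in the identity provider or warehouse, so this number should fall as extracts are retired.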

6) Future-proofing, or boxing yourself in

The most expensive migration is the next one. Choosing closed stacks today can lock you out of tomorrow’s opportunities, especially as AI and BI converge.

“Vendors aren’t saying BI and AI separately anymore, it’s AI plus BI as one concept. You don’t want your BI behaving like your data warehouse. Keep the core open and modular.” - Alejandro Martinez

“If the best model is on Google but you’re locked into Azure, you’ve boxed yourself in.” - Martin Mahler

Beyond static reports and classic dashboards, teams now need embedded analytics, operational apps, Python or advanced analytics inline, and conversational interfaces. If the tool you pick only does reporting, you will bolt on a second tool for the rest and recreate sprawl.

Vendor roadmaps are not neutral. Some steer BI deeper into their own cloud or CRM ecosystems. That may be fine until the best AI model, data service, or hosting option sits elsewhere. Portability is a feature, not a nice-to-have.

How to solve it

Prefer API-first components that embed cleanly, keep logic near data for in-warehouse AI, and avoid single-vendor roadmaps that trade short-term convenience for long-term lock-in.
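A common way to keep the AI layer swappable is to code against a small interface and wrap each vendor behind an adapter. The sketch assumes a text-completion capability; `TextModel`, `EchoModel`, and `summarize_metric` are hypothetical, and `EchoModel` is a stand-in where a real adapter would wrap a specific vendor's client library.

```python
from typing import Protocol


class TextModel(Protocol):
    """Interface the BI app depends on, instead of any one vendor's SDK."""

    def complete(self, prompt: str) -> str: ...


class EchoModel:
    """Stand-in adapter; a real one would call a specific provider's client."""

    def __init__(self, prefix: str) -> None:
        self.prefix = prefix

    def complete(self, prompt: str) -> str:
        return f"{self.prefix}: {prompt}"


def summarize_metric(model: TextModel, metric: str, value: float) -> str:
    """Application logic only sees the interface, so providers can be swapped."""
    return model.complete(f"Summarize {metric} = {value:,.2f}")
```

Switching providers then means writing one new adapter class, not rewriting every feature that calls the model.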

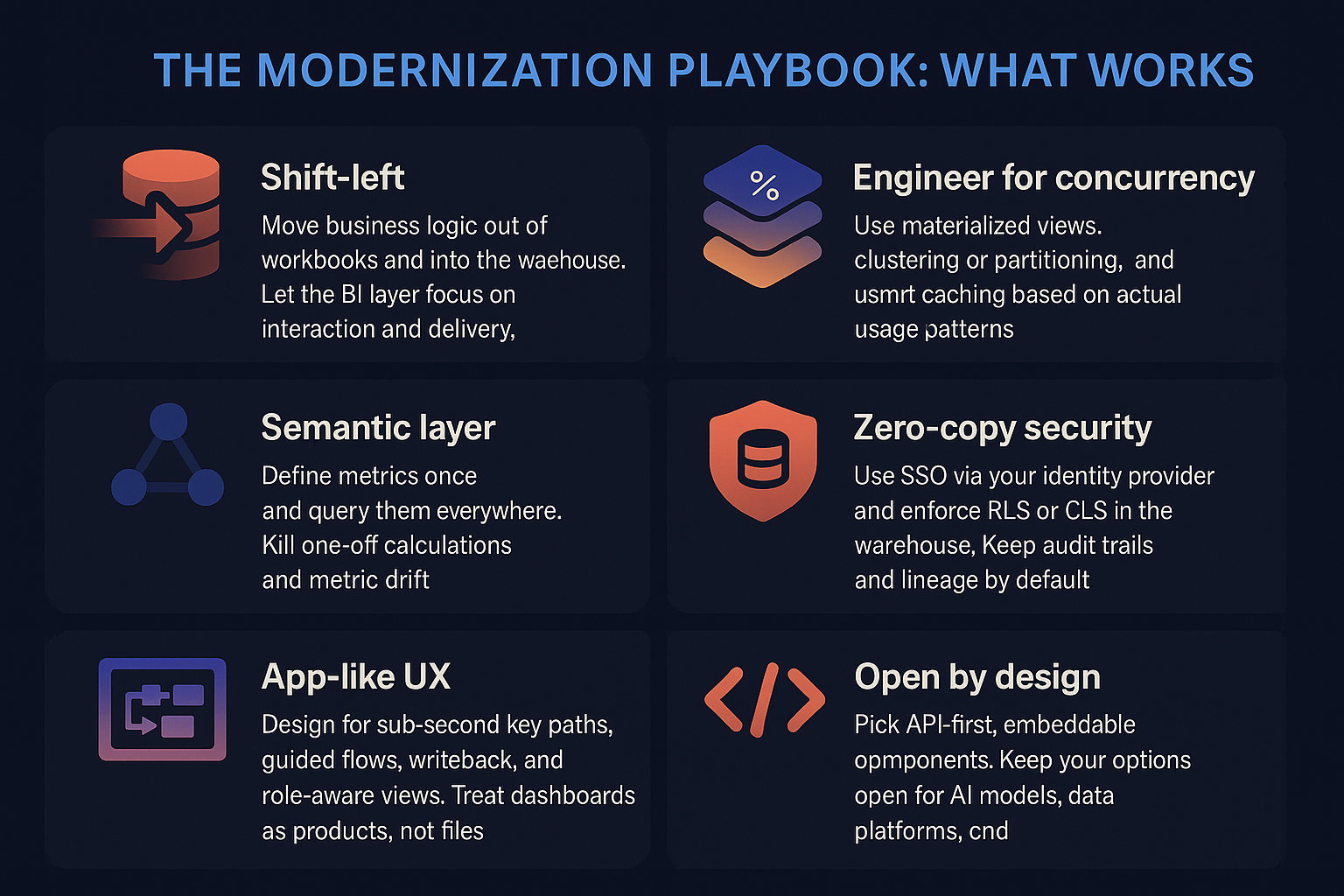

The modernization playbook: what works

1️⃣ Shift left

Move business logic out of workbooks and into the warehouse. Let the BI layer focus on interaction and delivery.

2️⃣ Semantic layer

Define metrics once and query them everywhere. Kill one-off calculations and metric drift.
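A semantic layer can be pictured as a registry that owns each metric's definition and compiles queries from it. This is a minimal sketch, not any product's API: `Metric`, `METRICS`, and `compile_query` are hypothetical, and the dimension names are assumed to come from a trusted list rather than raw user input.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Metric:
    name: str
    expression: str   # SQL aggregate, defined exactly once
    table: str


# Hypothetical registry: the single place each metric is defined.
METRICS = {
    "net_revenue": Metric("net_revenue", "SUM(amount) - SUM(refunds)", "fact_orders"),
    "order_count": Metric("order_count", "COUNT(DISTINCT order_id)", "fact_orders"),
}


def compile_query(metric_name: str, group_by: list[str]) -> str:
    """Build SQL from the shared definition; dimensions must be trusted identifiers."""
    m = METRICS[metric_name]
    dims = ", ".join(group_by)
    select = f"{dims}, {m.expression} AS {m.name}" if dims else f"{m.expression} AS {m.name}"
    sql = f"SELECT {select} FROM {m.table}"
    if dims:
        sql += f" GROUP BY {dims}"
    return sql
```

Every dashboard that asks for `net_revenue` gets the same expression, so changing the definition once changes it everywhere.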

3️⃣ Engineer for concurrency

Use materialized views, clustering or partitioning, and smart caching based on actual usage patterns.
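"Smart caching based on actual usage patterns" can be as simple as caching only queries that prove popular and expiring them after a time-to-live. The sketch assumes a `run_query` callable that hits the warehouse; `HotQueryCache`, its threshold, and its TTL defaults are hypothetical choices, and the injectable clock exists only to make the behavior testable.

```python
import time
from typing import Callable


class HotQueryCache:
    """Cache results only for frequently executed queries, and expire them after a TTL."""

    def __init__(self, run_query: Callable[[str], object], ttl_seconds: float = 300.0,
                 hot_threshold: int = 3, clock: Callable[[], float] = time.monotonic) -> None:
        self.run_query = run_query
        self.ttl = ttl_seconds
        self.hot_threshold = hot_threshold
        self.clock = clock
        self.executions: dict[str, int] = {}             # warehouse executions per query
        self.cache: dict[str, tuple[float, object]] = {}  # query -> (cached_at, result)

    def get(self, sql: str) -> object:
        now = self.clock()
        cached = self.cache.get(sql)
        if cached is not None and now - cached[0] < self.ttl:
            return cached[1]                    # fresh cached result: no warehouse cost
        self.executions[sql] = self.executions.get(sql, 0) + 1
        result = self.run_query(sql)            # goes to the warehouse
        if self.executions[sql] >= self.hot_threshold:
            self.cache[sql] = (now, result)     # only hot queries earn a cache slot
        return result
```

Rarely used queries always run live, so the cache spends memory only on the hot paths that drive warehouse spend.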

4️⃣ Zero-copy security

Use SSO via your identity provider and enforce RLS or CLS in the warehouse. Keep audit trails and lineage by default.

5️⃣ App-like UX

Design for sub-second key paths, guided flows, writeback, and role-aware views. Treat dashboards as products, not files.

6️⃣ Open by design

Pick API-first, embeddable components. Keep your options open for AI models, data platforms, and hosting choices.

Conclusion: Don’t move boxes, move the needle

Cloud migration is inevitable. Modernizing while you migrate is optional and decisive. Do it once, do it right, and you will not be funding another migration in three years.

Teams that modernize while they migrate (shifting logic left, centralizing metrics, engineering for concurrency, enforcing security at the data layer, and shipping app-like UX) do not just keep up. They design for what is next.

“Don’t solve just today’s problem. Design for how it will look in three to four years, you don’t want to fund another migration.” - Alejandro Martinez

The winners approach BI as a product, not a project. They measure cost against value, and they keep their stack open enough to follow the best ideas, including AI, wherever they emerge.

Want to see modern BI in action? Request a demo with the Astrato team!
